Snowflake Gen 2 vs Gen 1 + Databricks SQL Performance Comparison (As Of May, 2025)

A Comprehensive Battery Of Compute Performance Tests On Nearly 24B Rows Of Data

Snowflake recently released their "Gen 2" warehouses, claiming improved performance speed and cost performance, as long as you are willing to use a slightly more expensive warehouse. So, I put those claims to a test, plus added a fun twist: comparing performance to some Databricks SQL Serverless Warehouses.

For my testing, I used the dataset generator I previously published, and tuned the # of orders to give about 24B total rows (note: not order count, which is the parameter on the dataset generator) for the facts table. You can find that here: https://github.com/JosueBogran/coffeeshopdatagenerator

Cloud of choice? AWS. Snowflake Gen 2 costs are slightly less on Azure, but all my testing was done on AWS instances, and thus, reporting that cost accordingly.

I used mostly the same queries as in the past, plus added a few more (code for all queries will be published at the end of the article). In the past, the dataset tested contained about 3B rows or so, so definitely there are new pieces to this test. Note: I did not pick and choose which queries to keep or not. For example, some queries have virtually the same executing patterns but different values, and this tended to favor Snowflake, but I kept them in there because they were part of the tests.

Disclaimer: I am a Databricks MVP, Technical Council member, and work for a Databricks partnered SI, zeb. That said, all of the content here has been the result of my independently led tests, without any person or organization telling me what to test or whether I should release the results or not. My role at zeb graciously grants full editorial freedom on the topics I pursue to talk about. As always, don't just take my word for it, test things out yourself!

I will gladly welcome any CONSTRUCTIVE conversations.

General Technical Concepts Tested

Note: I did use ChatGPT to help summarize this portion. Running low on coffee, and, is tech content even relevant today if you don't say "AI" at least one?

Windows Functions: AVG(), OVER(), SUM(), RANK(), DENSE_RANK(). In queries 1, 2, 3, 5, 9, 10, 13, 14, and 15.

Time-Based Aggregation: DATE_TRUNC, YEAR, filtering by a date. In queries 2, 4, 6, 7, 8, 10, 15, and 16.

Group By + Aggregation: SUM, COUNT, AVG, complex derived columns in all, given the large dataset.

Multi-Table Joins: All queries.

Derived Metrics: Profit, margin, YoY $ change (LAG, CASE, math ops). In queries 4 and 15.

Write Operations: CREATE OR REPLACE TABLE AS. Queries 6, 10, and 15.

Filters and Conditions: 11-14

Ranking / Top-N: RANK, DENSE_RANK with WHERE rank <=N. Queries 2, 3, 8, 11-14, and 16.

All tests were done on warm warehouses, in consecutive order. Snowflake's SQL Warehouses have a 1 second warmup vs about 4 seconds for Databricks' SQL Servereless.

General Summary - Non Technical

Snowflake XL Gen 2 vs Gen 1

Hourly rates: Snowflake XL Gen 1 = $48, Snowflake XL Gen 2 = $64.8

Snowflake's Gen 2 is generally an improvement over Gen 1. Comparing Snowflake's XL Gen 2 vs Gen 1 shows that 16 queries were faster and 9 were cheaper + 3 that were within marginal variability range to be ties.

Gen 2 was $22.39 for all queries vs $20.72 Gen 1, suggesting that the 4 queries were Gen 1 did confidently outperform Gen 2 on costs were significant.

Gen 2 improved execution speed by about 20%, which is very solid.

I would personally opt for Gen 2, also expecting that future performance improvements from Snowflake will be more noticeable on it.

Databricks L vs Snowflake XL Gen 1/2

I've heard the claim that Databricks L is really a Snowflake XL. In the end, warehouse sizes such as "XL" or "L" are just marketing tools, with a combination of cost + performance being what matters to customers, but, let's entertain this matter.

Hourly burn rates for these configurations: Databricks L = $28, Snowflake XL Gen 1 = $48, Snowflake XL Gen 2 = $64.8.

Databricks' Large and Snowflake's XL Gen 1 evenly split the wins in terms of time, with 8 queries won each, but Databricks' option won 13 out of the 16 queries in terms of costs. Overall, $13.89 for Databricks L vs $20.72 on Snowflake's XL Gen 1.

Educational purposes only: Now, Databricks' Large vs Snowflake's XL Gen 2, which is really an unfair matchup in terms of base costs ($64.8 x hour for Snowflake's vs $28 x hour for Databricks') is an interesting one: Snowflake won on 13 out of 16 queries on performance, but only won 4 in terms of costs. That's $13.89 for Databricks vs $22.39 for Snowflake's About 50% improvement in speed at roughly a 30% premium.

Databricks L vs Snowflake L Gen 2

Burn rates of $28 and $32.4 respectively.

Performance wise, Databricks won 10 out of 16 queries and 12 out of 16 cost wise. Total cost of $13.89 for Databricks L vs Snowflake's L Gen 2 at $22.48.

Databricks XL vs Snowflake XL Gen 1/2

Cost-wise, this is the most evenly balanced comparison on a x hourly rate basis.

When looking at this comparison, the cost per hour: Databricks XL = $56, Snowflake XL Gen 1 = $48, Snowflake Gen 2 = $64.8

Databricks' XL beat Snowflake's XL Gen 1 in terms of performance 13 out of 16 times. Cost wise, Databricks beat 11 out of 16 times, with one additional query appearing within margin of error for a potential tie. $15.85 for Databricks vs $20.72 for Snowflake.

On Databricks XL vs Snowflake XL Gen 2, it was 9 wins for Databricks plus one virtual tie in terms of performance. About 10 wins for Databricks vs Snowflake's offering in terms of costs, leading to about $15.85 vs $22.39.

Results

Aggregated - Execution Time

Aggregated - Execution $

Execution Time - All

Execution $ - All

Execution Time - Without Query 10 & 16

Execution $ - Without Query 10 & 16

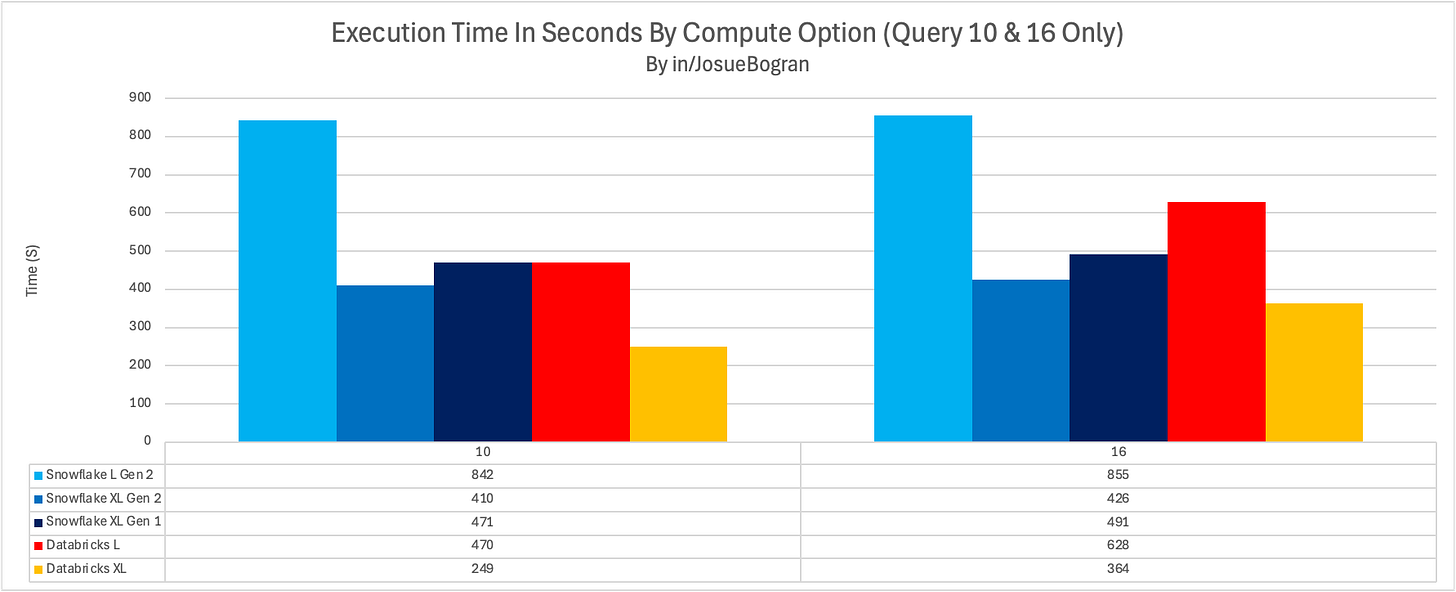

Execution Time - Query 10 & 16

Execution $ - Query 10 & 16

Recreating Tests

Dataset Generator: https://github.com/JosueBogran/coffeeshopdatagenerator

Code:

--Query 01

SELECT

f.order_date,

l.city,

SUM(f.sales_amount) AS total_sales,

AVG(SUM(f.sales_amount)) OVER (

PARTITION BY l.city

ORDER BY f.order_date

ROWS BETWEEN 6 PRECEDING AND CURRENT ROW

) AS rolling_7day_avg

FROM sweetcoffeetree.coffeesales15b.fact_sales f

JOIN sweetcoffeetree.coffeesales15b.dim_locations l

ON f.location_id = l.location_id

GROUP BY

f.order_date,

l.city

ORDER BY

l.city,

f.order_date;

--Query 02

WITH monthly_sales AS (

SELECT

DATE_TRUNC('month', f.order_date) AS sales_month,

f.product_name,

SUM(f.sales_amount) AS total_sales

FROM sweetcoffeetree.coffeesales15b.fact_sales f

GROUP BY

DATE_TRUNC('month', f.order_date),

f.product_name

)

SELECT

sales_month,

product_name,

total_sales,

RANK() OVER (PARTITION BY sales_month ORDER BY total_sales DESC) AS sales_rank

FROM monthly_sales

ORDER BY sales_month, sales_rank;

--Query 03

WITH season_discount AS (

SELECT

l.city,

l.state,

f.season,

AVG(f.discount_percentage) AS avg_discount

FROM sweetcoffeetree.coffeesales15b.fact_sales f

JOIN sweetcoffeetree.coffeesales15b.dim_locations l

ON f.location_id = l.location_id

GROUP BY

l.city,

l.state,

f.season

)

SELECT

city,

state,

season,

avg_discount,

discount_rank

FROM (

SELECT

city,

state,

season,

avg_discount,

DENSE_RANK() OVER (PARTITION BY season ORDER BY avg_discount DESC) AS discount_rank

FROM season_discount

) t

WHERE discount_rank <= 3

ORDER BY season, discount_rank;

--Query 04

SELECT

f.order_date,

f.product_name,

p.standard_price,

p.standard_cost,

SUM(f.quantity) AS total_quantity_sold,

SUM(f.sales_amount) AS total_sales_amount,

(p.standard_price - p.standard_cost) * SUM(f.quantity) AS theoretical_margin

FROM sweetcoffeetree.coffeesales15b.fact_sales f

JOIN sweetcoffeetree.coffeesales15b.dim_products p

ON f.product_name = p.name

AND f.order_date BETWEEN p.from_date AND p.to_date

GROUP BY

f.order_date,

f.product_name,

p.standard_price,

p.standard_cost

ORDER BY

f.order_date,

f.product_name;

--Query 05

WITH daily_city_qty AS (

SELECT

f.order_date,

l.city,

SUM(f.quantity) AS daily_qty

FROM sweetcoffeetree.coffeesales15b.fact_sales f

JOIN sweetcoffeetree.coffeesales15b.dim_locations l

ON f.location_id = l.location_id

GROUP BY

f.order_date,

l.city

)

SELECT

order_date,

city,

daily_qty,

SUM(daily_qty) OVER (

PARTITION BY city

ORDER BY order_date

ROWS BETWEEN 29 PRECEDING AND CURRENT ROW

) AS rolling_30day_qty

FROM daily_city_qty

ORDER BY city, order_date;

--Query 06

CREATE OR REPLACE TABLE sweetcoffeetree.coffeesales15b.query06 AS

WITH monthly_cat AS (

SELECT

DATE_TRUNC('month', f.order_date) AS sales_month,

p.category,

SUM(f.sales_amount) AS monthly_revenue

FROM sweetcoffeetree.coffeesales15b.fact_sales f

JOIN sweetcoffeetree.coffeesales15b.dim_products p

ON f.product_name = p.name

AND f.order_date BETWEEN p.from_date AND p.to_date

GROUP BY

DATE_TRUNC('month', f.order_date),

p.category

)

SELECT

sales_month,

category,

monthly_revenue

FROM monthly_cat;

--Query 07

WITH yearly_sales AS (

SELECT

l.location_id,

l.city,

l.state,

YEAR(f.order_date) AS sales_year,

SUM(f.sales_amount) AS total_sales_year

FROM sweetcoffeetree.coffeesales15b.fact_sales f

JOIN sweetcoffeetree.coffeesales15b.dim_locations l

ON f.location_id = l.location_id

GROUP BY

l.location_id,

l.city,

l.state,

YEAR(f.order_date)

)

SELECT

city,

state,

SUM(CASE WHEN sales_year = 2023 THEN total_sales_year ELSE 0 END) AS sales_2023,

SUM(CASE WHEN sales_year = 2024 THEN total_sales_year ELSE 0 END) AS sales_2024,

(SUM(CASE WHEN sales_year = 2024 THEN total_sales_year ELSE 0 END)

- SUM(CASE WHEN sales_year = 2023 THEN total_sales_year ELSE 0 END)) AS yoy_diff

FROM yearly_sales

GROUP BY

city,

state

ORDER BY

city,

state;

--Query 08

WITH city_quarter_subcat AS (

SELECT

l.city,

DATE_TRUNC('quarter', f.order_date) AS sales_quarter,

p.subcategory,

SUM(f.sales_amount) AS total_sales

FROM sweetcoffeetree.coffeesales15b.fact_sales f

JOIN sweetcoffeetree.coffeesales15b.dim_locations l

ON f.location_id = l.location_id

JOIN sweetcoffeetree.coffeesales15b.dim_products p

ON f.product_name = p.name

AND f.order_date BETWEEN p.from_date AND p.to_date

GROUP BY

l.city,

DATE_TRUNC('quarter', f.order_date),

p.subcategory

)

SELECT

city,

sales_quarter,

subcategory,

total_sales,

RANK() OVER (PARTITION BY city, sales_quarter ORDER BY total_sales DESC) AS subcat_rank

FROM city_quarter_subcat

ORDER BY city, sales_quarter, subcat_rank;

--Query 09

WITH daily_discount AS (

SELECT

l.city,

f.order_date,

AVG(f.discount_percentage) AS avg_discount

FROM sweetcoffeetree.coffeesales15b.fact_sales f

JOIN sweetcoffeetree.coffeesales15b.dim_locations l

ON f.location_id = l.location_id

GROUP BY

l.city,

f.order_date

)

SELECT

city,

order_date,

avg_discount,

AVG(avg_discount) OVER (

PARTITION BY city

ORDER BY order_date

ROWS BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW

) AS cumulative_avg_discount

FROM daily_discount

ORDER BY city, order_date;

--Query 10

CREATE OR REPLACE TABLE

sweetcoffeetree.coffeesales15b.query10 AS

WITH daily_orders AS (

SELECT

f.order_date,

l.city,

COUNT(DISTINCT f.order_id) AS daily_distinct_orders

FROM sweetcoffeetree.coffeesales15b.fact_sales f

JOIN sweetcoffeetree.coffeesales15b.dim_locations l

ON f.location_id = l.location_id

GROUP BY

f.order_date,

l.city

)

SELECT

order_date,

city,

daily_distinct_orders,

SUM(daily_distinct_orders) OVER (

PARTITION BY city

ORDER BY order_date

ROWS BETWEEN 89 PRECEDING AND CURRENT ROW

) AS rolling_90d_distinct_orders

FROM daily_orders

ORDER BY city, order_date;

--Query 11

WITH city_quarter_subcat AS (

SELECT

l.city,

DATE_TRUNC('quarter', f.order_date) AS sales_quarter,

p.subcategory,

SUM(f.sales_amount) AS total_sales

FROM sweetcoffeetree.coffeesales15b.fact_sales f

JOIN sweetcoffeetree.coffeesales15b.dim_locations l

ON f.location_id = l.location_id

JOIN sweetcoffeetree.coffeesales15b.dim_products p

ON f.product_name = p.name

AND f.order_date BETWEEN p.from_date AND p.to_date

WHERE l.city IN ('Charlotte', 'Houston')

GROUP BY

l.city,

DATE_TRUNC('quarter', f.order_date),

p.subcategory

)

SELECT

city,

sales_quarter,

subcategory,

total_sales,

RANK() OVER (PARTITION BY city, sales_quarter ORDER BY total_sales DESC) AS subcat_rank

FROM city_quarter_subcat

ORDER BY city, sales_quarter, subcat_rank;

--Query 12

WITH city_quarter_subcat AS (

SELECT

l.city,

DATE_TRUNC('quarter', f.order_date) AS sales_quarter,

p.subcategory,

SUM(f.sales_amount) AS total_sales

FROM sweetcoffeetree.coffeesales15b.fact_sales f

JOIN sweetcoffeetree.coffeesales15b.dim_locations l

ON f.location_id = l.location_id

JOIN sweetcoffeetree.coffeesales15b.dim_products p

ON f.product_name = p.name

AND f.order_date BETWEEN p.from_date AND p.to_date

WHERE l.city IN ('Charlotte', 'Houston')

AND DATE_TRUNC('quarter', f.order_date) IN (

DATE('2023-01-01'), DATE('2023-04-01'),

DATE('2024-01-01'), DATE('2024-04-01')

)

GROUP BY

l.city,

DATE_TRUNC('quarter', f.order_date),

p.subcategory

)

SELECT

city,

sales_quarter,

subcategory,

total_sales,

RANK() OVER (PARTITION BY city, sales_quarter ORDER BY total_sales DESC) AS subcat_rank

FROM city_quarter_subcat

ORDER BY city, sales_quarter, subcat_rank;

--Query 13

WITH city_quarter_subcat AS (

SELECT

l.city,

DATE_TRUNC('quarter', f.order_date) AS sales_quarter,

p.subcategory,

SUM(f.sales_amount) AS total_sales

FROM sweetcoffeetree.coffeesales15b.fact_sales f

JOIN sweetcoffeetree.coffeesales15b.dim_locations l

ON f.location_id = l.location_id

JOIN sweetcoffeetree.coffeesales15b.dim_products p

ON f.product_name = p.name

AND f.order_date BETWEEN p.from_date AND p.to_date

WHERE l.city = 'Austin'

AND DATE_TRUNC('quarter', f.order_date) IN (

DATE('2023-01-01'), DATE('2023-04-01'),

DATE('2024-01-01'), DATE('2024-04-01')

)

GROUP BY

l.city,

DATE_TRUNC('quarter', f.order_date),

p.subcategory

)

SELECT

city,

sales_quarter,

subcategory,

total_sales,

RANK() OVER (PARTITION BY city, sales_quarter ORDER BY total_sales DESC) AS subcat_rank

FROM city_quarter_subcat

ORDER BY city, sales_quarter, subcat_rank;

--Query 14

WITH city_quarter_subcat AS (

SELECT

l.city,

DATE_TRUNC('quarter', f.order_date) AS sales_quarter,

p.subcategory,

SUM(f.sales_amount) AS total_sales

FROM sweetcoffeetree.coffeesales15b.fact_sales f

JOIN sweetcoffeetree.coffeesales15b.dim_locations l

ON f.location_id = l.location_id

JOIN sweetcoffeetree.coffeesales15b.dim_products p

ON f.product_name = p.name

AND f.order_date BETWEEN p.from_date AND p.to_date

WHERE DATE_TRUNC('quarter', f.order_date) IN (

DATE('2023-01-01'), DATE('2023-04-01'),

DATE('2024-01-01'), DATE('2024-04-01')

)

GROUP BY

l.city,

DATE_TRUNC('quarter', f.order_date),

p.subcategory

)

SELECT

city,

sales_quarter,

subcategory,

total_sales,

RANK() OVER (PARTITION BY city, sales_quarter ORDER BY total_sales DESC) AS subcat_rank

FROM city_quarter_subcat

ORDER BY city, sales_quarter, subcat_rank;

--Query 15

CREATE OR REPLACE TABLE sweetcoffeetree.coffeesales15b.query15 AS

WITH base_data AS (

SELECT

f.location_id,

l.city,

f.product_name,

DATE_TRUNC('quarter', f.order_date) AS sales_quarter,

SUM(f.sales_amount) AS total_sales,

SUM(f.sales_amount * (f.discount_percentage / 100.0)) AS total_discount,

SUM(f.quantity * p.standard_cost) AS total_cogs

FROM sweetcoffeetree.coffeesales15b.fact_sales f

JOIN sweetcoffeetree.coffeesales15b.dim_products p

ON f.product_name = p.name

AND f.order_date BETWEEN p.from_date AND p.to_date

JOIN sweetcoffeetree.coffeesales15b.dim_locations l

ON f.location_id = l.location_id

WHERE f.order_date BETWEEN '2022-01-01' AND '2024-12-31'

GROUP BY f.location_id, l.city, f.product_name, DATE_TRUNC('quarter', f.order_date)

),

with_profit AS (

SELECT

*,

total_sales - total_discount - total_cogs AS profit

FROM base_data

),

with_yoy AS (

SELECT

*,

LAG(profit) OVER (PARTITION BY location_id, product_name ORDER BY sales_quarter) AS prev_profit,

ROUND(

CASE

WHEN LAG(profit) OVER (PARTITION BY location_id, product_name ORDER BY sales_quarter) = 0 THEN NULL

ELSE 100.0 * (profit - LAG(profit) OVER (PARTITION BY location_id, product_name ORDER BY sales_quarter)) /

LAG(profit) OVER (PARTITION BY location_id, product_name ORDER BY sales_quarter)

END, 2

) AS yoy_profit_pct

FROM with_profit

)

SELECT

city,

product_name,

sales_quarter,

profit,

prev_profit,

yoy_profit_pct

FROM with_yoy;

--Query 16

WITH seasonal_data AS (

SELECT

l.state,

f.season,

p.category,

SUM(f.sales_amount) AS total_sales,

SUM(f.quantity) AS total_units,

COUNT(DISTINCT f.order_id) AS order_count

FROM sweetcoffeetree.coffeesales15b.fact_sales f

JOIN sweetcoffeetree.coffeesales15b.dim_products p

ON f.product_name = p.name

AND f.order_date BETWEEN p.from_date AND p.to_date

JOIN sweetcoffeetree.coffeesales15b.dim_locations l

ON f.location_id = l.location_id

WHERE f.order_date BETWEEN '2023-01-01' AND '2024-06-30'

GROUP BY l.state, f.season, p.category

),

ranked AS (

SELECT

*,

DENSE_RANK() OVER (PARTITION BY state, season ORDER BY total_sales DESC) AS category_rank

FROM seasonal_data

)

SELECT *

FROM ranked

WHERE category_rank <= 3

ORDER BY state, season, category_rank;